Lmao who did this? pic.twitter.com/0puvY5EjCN

— Not Jerome Powell (@alifarhat79) June 8, 2025

Watch video here

This is a quick opinion piece to highlight what I think is a very serious flaw in New Zealand’s ‘Healthy Homes’ regulations, which come into force on July 1.

‘Healthy Homes’ Nanny State legislation means more New Zealanders sleeping on the streets…

Read this article… Healthy Homes Standards | Are You Ready for 1 July 2025?

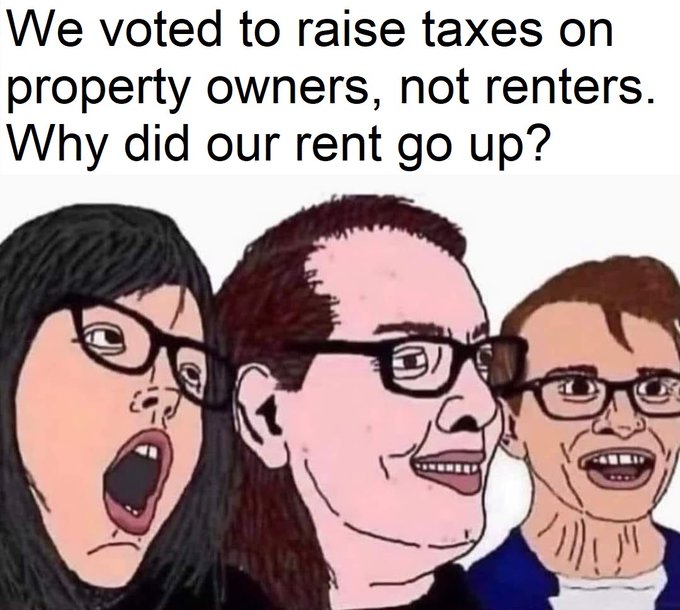

The fundamental problem with Socialism is that there are always unintended negative consequences when Government seeks to use coercion or compulsion, even for supposedly well-intended ends.

In this case the intention is to ‘force’ Landlords to improve their rental properties… making them ‘healthier’ for tenants.

Sounds great if you don’t consider the negative results.

Firstly, it assumes Landlords don’t care about their tenants… or their assets (their rental properties)… and it ignores the ability of Tenants and Landlords to negotiate their own agreements.

There are many reasons why a landlord may prefer to defer improving the condition of a rental property, and charge lower rents.

Mostly the reasons are economic realities.

Some might say that’s proof that landlords are greedy, yet let me tell you there are real economic and other benefits for tenants too!

We all know that renting out a house entails some risk to the landlord’s property: carpets get ruined, walls can get holes in them, dogs chew on wood, etc.

If a house is a bit rough, the risk is lower, and therefore landlords are more likely to charge lower rents and be more open to renting their houses to families with pets and children.

If they are forced to spend money upgrading the property, they will have to be stricter about who they rent their homes to… because they have more to lose should pets and children damage wallpaper, doors, etc.

Rents will have to increase to cover the cost of the improvements… heat pumps, insulation, etc… and these rent increases put the rent out of reach of many low-income families.

For many Landlords, the cost of bringing the house up to the new standards will make it economically ‘untenable’… and thousands of homes that could have been available for cheap rent will be left empty or demolished.

With fewer choices and fewer houses for rent, rents must increase dramatically.

That’s the Law of supply and demand.

Building new rental housing will also be more expensive, and thus rents will remain higher.

This will mean the very people this socialist legislation was supposed to ‘help’… will be the ones most adversely affected… and many will end up sleeping rough.

All because of woolly-headed Socialist politicians who hate landlords… hate private property.

In fact it is no stretch of the imagination to believe these socialists *want* to create a housing crisis so that they can claim ‘capitalism is failing’ and therefore this is a ruse to justify creating more State housing… *This is Creeping Communism by political design*.

When I was a very young Engineering apprentice living in West Auckland in the mid-80s, I flatted in what many people today would consider sub-standard conditions.

I lived in several sheds, an unfinished shell of a house, and in many ‘dumps’… houses that were on their last legs… often with other young bogans.

I even stayed for a few months in the ‘Tui Glen Trailer Park’!

I must say those were great times, and I appreciated every one of those dwellings. They were only temporary, and I could afford to stay there on my low apprentice wages, and Landlords did not mind that we were young hooligans who would have parties, and pin posters to the walls, never vacuum, etc etc…

These ‘Dumps’ were life-saving *homes* for us… and we were happy to be there.

Now these examples are a bit extreme… yet let me tell you, even poor families would appreciate and prefer such places to sleeping in tents and cars… or having to split up their families… or having their kids sleeping rough.

It should also be understood that the State itself is notoriously guilty of being a slum landlord!

State housing is a form of welfare. Many people become ensnared in it… never seeking jobs, working hard, and eventually buying their own homes… thus state housing tends to entrench welfarism and poverty.

Many State houses become squalid, and citizens are forever paying for this welfare dependence through taxes.

That is what I wished to say here today.

I thought my experience as a low income young lout living in West Auckland in the 1980s was worth tabling in this dialogue.

It makes me sad to know so many awesome old houses will be demolished because of this short-sighted, foolish Nanny State legislation.

These Chardonnay Socialists have their heads in the clouds and don’t have a clue about the real world.

They claim they know what’s best, or acceptable for everyone.

Dangerous, pompous twits whose anti-freedom legislation impoverishes and cripples our society.

I guarantee you most poor people living in houses that are to be condemned will prefer the Government to BUGGER OFF!

People living in such places learn how to make them tolerable, livable, and homely.

A bit of moisture on the inside of windows was normal *for everyone* in the 70s and 80s…

Mold can be cleaned with a little bleach… it does not have to be left to take over.

This legislation will be very grievous for poor rural communities in which whole villages will not comply.

People must take responsibility for themselves.

In my Libertarian opinion, it’s not the government’s job to write off homes that people are willing to rent.

It’s all relative.

Ironically, because of this type of legislation we could even see the growth of ‘homeless camps’… absolutely degrading and squalid!

*Now that is real poverty and a danger to health*.

My sojourn in some of West Auckland’s dives was short-lived. I completed my Engineering apprenticeship and now own a mid-priced home in the Waikato.

Mortgage free.

My story is not that unique, yet I can easily imagine that, had these laws been in place back in the 80s, I might have found myself sleeping on the street.

Tim Wikiriwhi.

Christian libertarian.

A 2.5-year-old was given a year to live because she was dying from intestinal failure and withering away. Her mom put her on a carnivore diet and within 2 months, she came off of IV nutrition for the first time in her life.

Doctors were astonished. They had never seen anything like this. Nobody is supposed to survive this condition but the girl not only still lives, she has recovered and lives a normal life today as a 5-year-old.

Her mom, @CHealthCollect, wrote a book about MMIHS and her daughter’s journey and has been able to help some other children as well! She’s on X but much more active on TikTok under the same tag.

— 💯 Cary Kelly 💯 (@CaryKelly11) May 29, 2025

I reached these same conclusions independently of watching this video… yet it is always nice to hear one’s thoughts validated by others like this… and of course these facts are obvious… especially to engineers (like myself) and mechanics. All the superfluous electronics are wildly out of hand… stratospherically so… Yet the power trippers want us to swallow this crap, to become assimilated in the madness… yet we have a choice… an obligation to resist.

Tim Wikiriwhi.

Libertarian Christian.

EPIC!!!!!! These boys do NZ proud. Stoner Rock does not get the accolades it deserves. It really is the next step past 70s prog rock and 90s Grunge. This is why Monster Magnet never achieved the commercial success they deserved. Legends!

If you wanna fight, you better believe you’ve got one! pic.twitter.com/Do1sz25Vr2

— Alex Jones (@RealAlexJones) May 26, 2025

From here: Sudden and Unexpected on X

Scott Adams, the creator of Dilbert, has stage 4 prostate cancer that has metastasized to his bones. He says “anti-vaxxers are clearly the winners. I did not end up in the right place.”

— “Sudden And Unexpected” (@toobaffled) May 26, 2025

He needs to look outside Orthodox medicine for a cure for his cancer… There are many possibilities. Orthodox medicine is controlled by Big Pharma… and they don’t want there to be a cure for cancer because that’s their meal ticket… to $Billions.

Check out these…

Anti-parasitic drug Fenbendazole kills Cancer.

6% Food grade Hydrogen peroxide as treatment for all cancers.